Taming the Data Explosion

NEXUS research pioneers new applications for quick, efficient and secure data management

By Jane Palmer

November, 2016

Each day hundreds, if not thousands, of NEXUS sensors record climate and environmental data at various points in Nevada. It’s information that will help NEXUS researchers in their goal to maximize the efficiency of solar energy while minimizing the impact on the environment, but this vast amount of data needs to be transported, stored and represented in a way that will help the scientists interpret it.

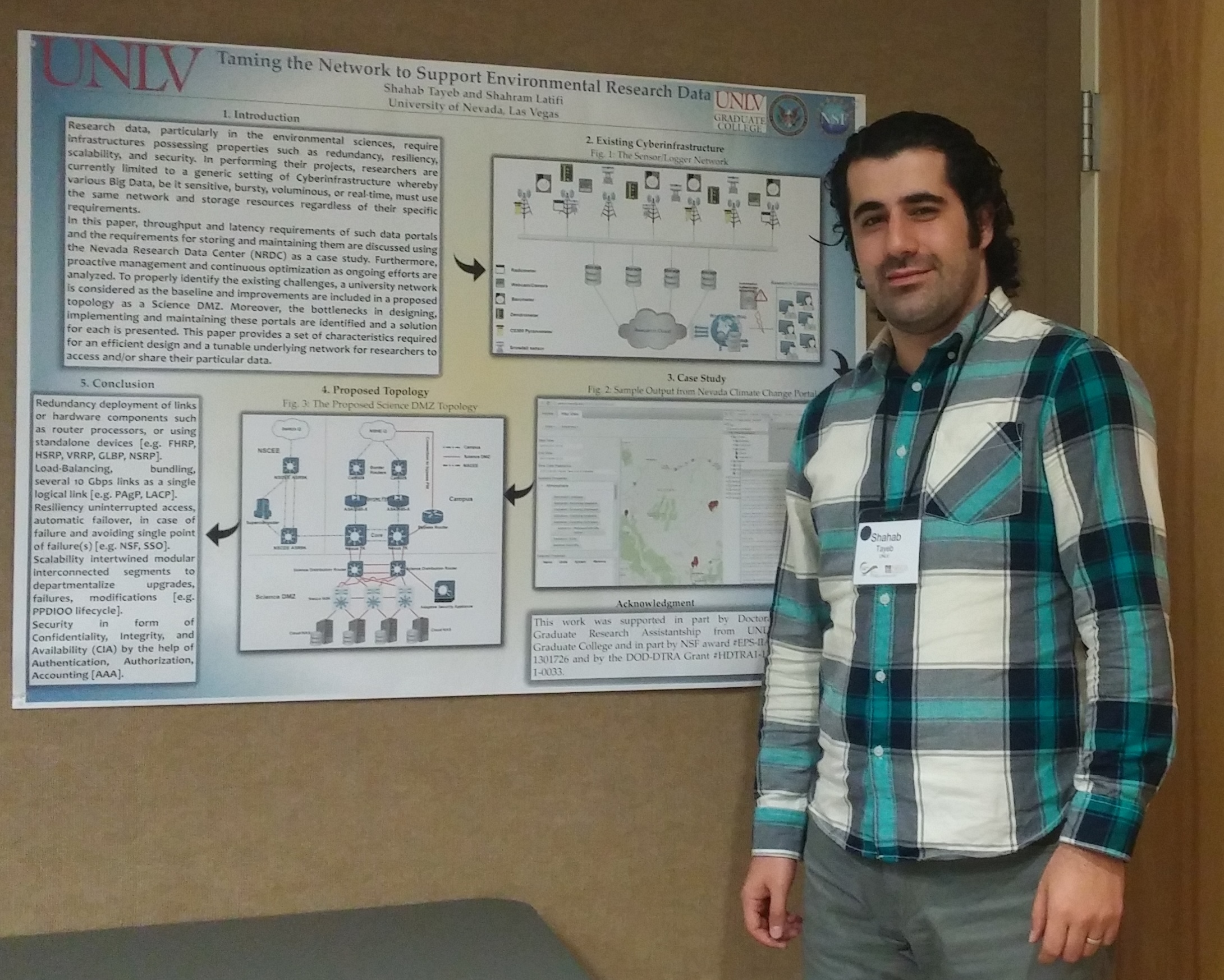

“NEXUS research is based on the observations we make in the data that we see, and the conclusions that we draw,” says NEXUS researcher Dr. Shahram Latifi, a professor in the department of electrical and computer engineering at the University of Nevada, Las Vegas (UNLV). “So by being able to process data efficiently, we, as scientists, begin to get a better idea of what works and what doesn’t.”

The mission of NEXUS cyberinfrastructure (CI) is to support scientific discovery by providing data management, communication and processing through the Nevada Research Data Center (NRDC). The challenge CI faces is that the volume of data is large and continues to grow.

“Considering the fact that most of these data are picked up on a 24/7 basis constantly, year around, you can imagine that there’s going to be a lot of data that is collected,” says Latifi. “So taming this explosion of data calls for efficient mechanisms to transfer this voluminous data and store it.”

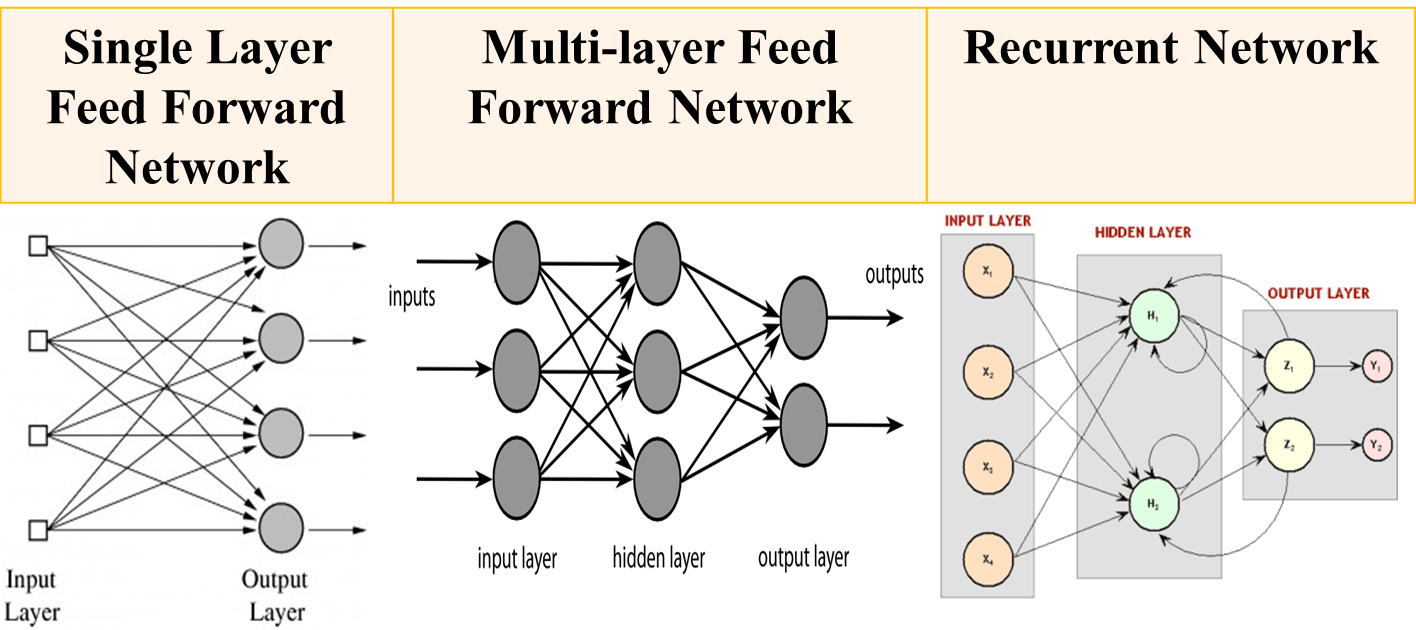

Types of Neural Networks used for Prediction

–S. Latifi

Predicting the Future

Perhaps the most fundamental aspect of data management is communicating and banking the recorded observations so that they can be accessed, analyzed and interpreted by scientists. But to do this for every recorded variable would be computationally unsustainable. To address this challenge, Latifi and his team have investigated data compression techniques: algorithms to reduce the amount of data that needs to be transferred and stored.

In particular, the team has used neural network models, which, when fed large quantities of data, begin to recognize inherent patterns and trends in the information. These models effectively accumulate knowledge about how a particular system behaves. Using that stored knowledge, a model can then make accurate predictions about what data it is likely to see in the future under given circumstances. “It is like the adage that says the past is a good indication of the future,” Latifi says.

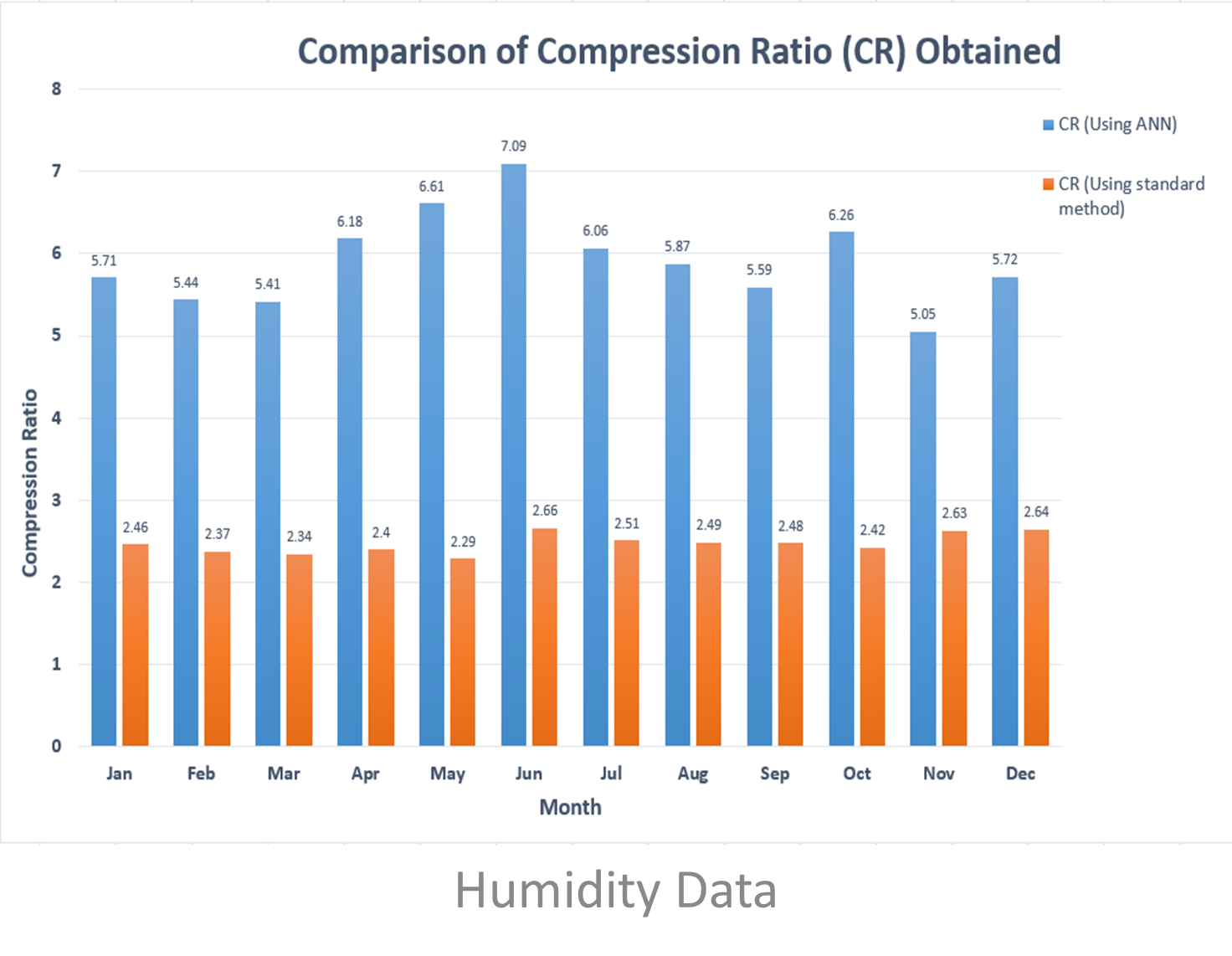

To “train” the model, Latifi and his team fed it large amounts of climate data: variables such as humidity and temperature observed at specific locations in Nevada. Then, once the network was fully trained, instead of transferring the new observations, the scientists simply programmed the model to predict what it believed those variables would be, based on past experience.

“The way the compression works is you guess what the data is going to be and then you send only the difference between the predicted and actual values,” Latifi says. “If you’re right on, the difference would be zero and you have nothing to send.” If the prediction doesn’t match the observations, all that needs to be stored is the difference between the predicted and observed values, not the entire newly recorded data set. “So by making a sophisticated network model, we tried to come to what the next sequence of data is, you know, as closely as possible, and then encode the error that we made,” Latifi says.

With this technique, no information is lost in the compression. Such “lossless compression” typically reduces the amount of data transferred by only a factor of two or three. But using the neural network model, the team has been able to achieve compression factors of eight or nine. “These compression ratios are phenomenal and should be able to save us lots of space in terms of storing the data and a lot of bandwidth in terms of communicating the data,” Latifi says.
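The predict-then-send-the-difference idea described above can be illustrated with a minimal sketch. It is not the team's method: the neural network is replaced here by a deliberately simple, hypothetical predictor (`predict`, which guesses that the next reading equals the previous one), and the readings are made-up integer values. The point is only to show that sender and receiver, running the same predictor, can recover the original data exactly from the differences.

```python
from typing import List


def predict(history: List[int]) -> int:
    """Hypothetical stand-in for a trained model: guess the last value seen."""
    return history[-1] if history else 0


def encode(readings: List[int]) -> List[int]:
    """Sender side: keep only the difference between actual and predicted."""
    residuals: List[int] = []
    history: List[int] = []
    for actual in readings:
        residuals.append(actual - predict(history))
        history.append(actual)
    return residuals


def decode(residuals: List[int]) -> List[int]:
    """Receiver side: rerun the same predictor and add each difference back."""
    history: List[int] = []
    for diff in residuals:
        history.append(predict(history) + diff)
    return history


# Made-up temperature readings in tenths of a degree (illustrative only).
readings = [215, 215, 216, 216, 216, 217, 215]
residuals = encode(readings)
restored = decode(residuals)

print(residuals)             # [215, 0, 1, 0, 0, 1, -2]
print(restored == readings)  # True: nothing was lost
```

After the first value, the differences are small and often zero, so they can be stored in far fewer bits than the raw readings. A better predictor, such as a trained neural network, would presumably produce even smaller differences, which is where the larger compression factors would come from.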

Speedy and Secure Solutions

The team also focuses on facilitating quick data transmission from the field and between scientists. “Because these sensors pick up the data constantly you want to be able to transmit them on the fly,” Latifi says. “And you don’t want scientist A to wait forever to analyze the data sent by scientist B on another campus.”

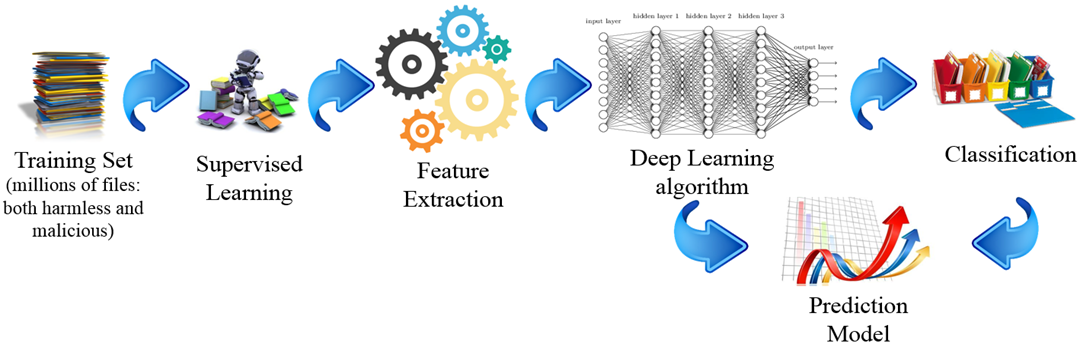

Such speedy data transfer requires better network protocols, a focus of Latifi’s research. But his research also aims to make such high-speed communication as secure as possible. To achieve this, the team uses algorithms that identify security breaches and block potential threats, along with improved network protocols that allow different layers of security for data transmission.

Investigating such techniques for fast and secure data transmission is a passion for Latifi. “When you can send gigabytes of information in a blink of an eye or when you can compress data by a factor of two or three better than it is supposed to be it’s exciting,” Latifi says. “The fact that you can save so much storage and communication time.”

Ultimately, however, he derives great satisfaction from being able to serve the mission of the NEXUS project. “It’s just so exciting how we are able to connect different projects and bring people together and facilitate communication,” Latifi says.

Block Diagram of Deep Learning Applied to Threat Prediction in NEXUS Data

–S. Latifi